01 Intro

One of the favorite activities of tech analysts, VCs and similar Twitter armchair experts is to predict what the next big technology platform might be.

The usual suspects that come up in these conversations are VR/AR, crypto, smart speakers and similar IoT devices. A new contestant that I’ve seen come up more frequently in these debates recently is Apple’s AirPods.

Calling AirPods “the next big platform” is interesting because at the moment, they are not even a small platform. They are no platform at all. They are just a piece of hardware.

But that doesn’t mean they can’t become a platform.

02 What is a platform?

Let’s first take a look at what a platform actually is.

At its core, a platform is something that others can build on top of. A classic example would be an operating system like iOS: By providing a set of APIs, Apple created a playground for developers to build and run applications on. In fact, new input capabilities such as the touch interface, gyroscope sensor and camera allowed developers to create unique applications that weren’t possible before.

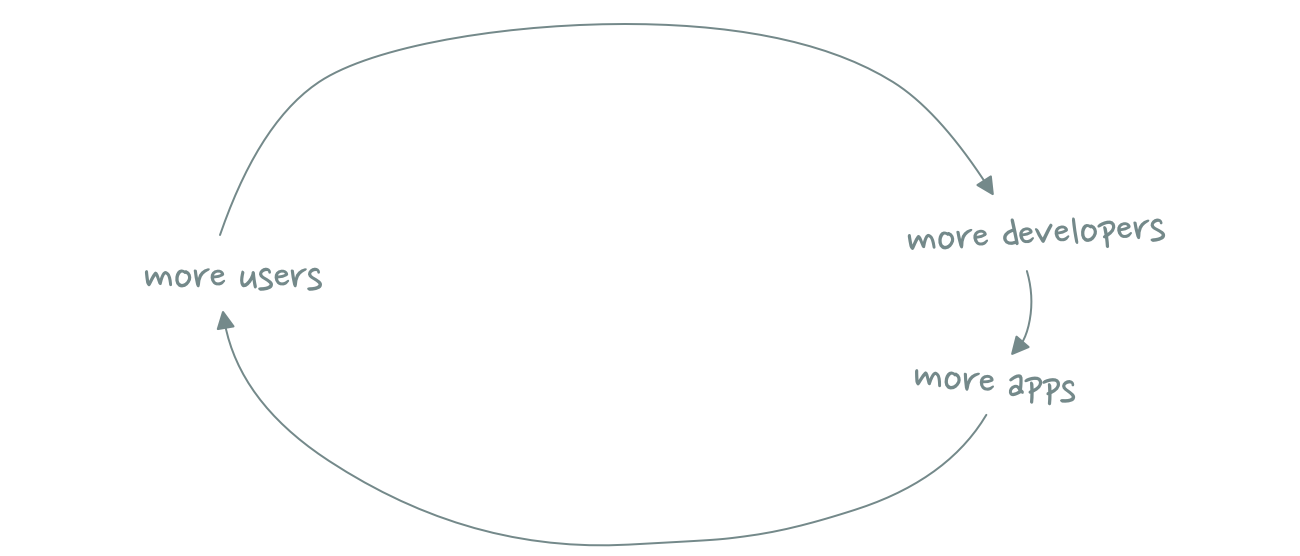

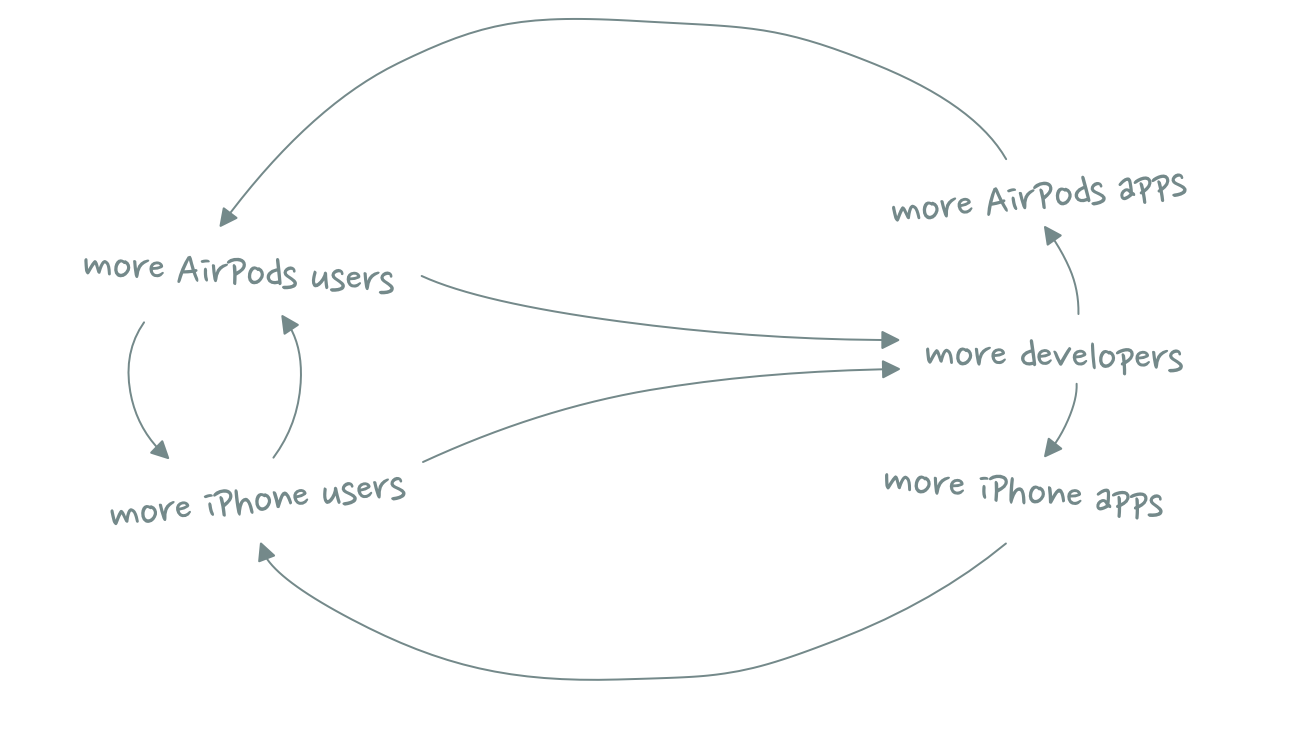

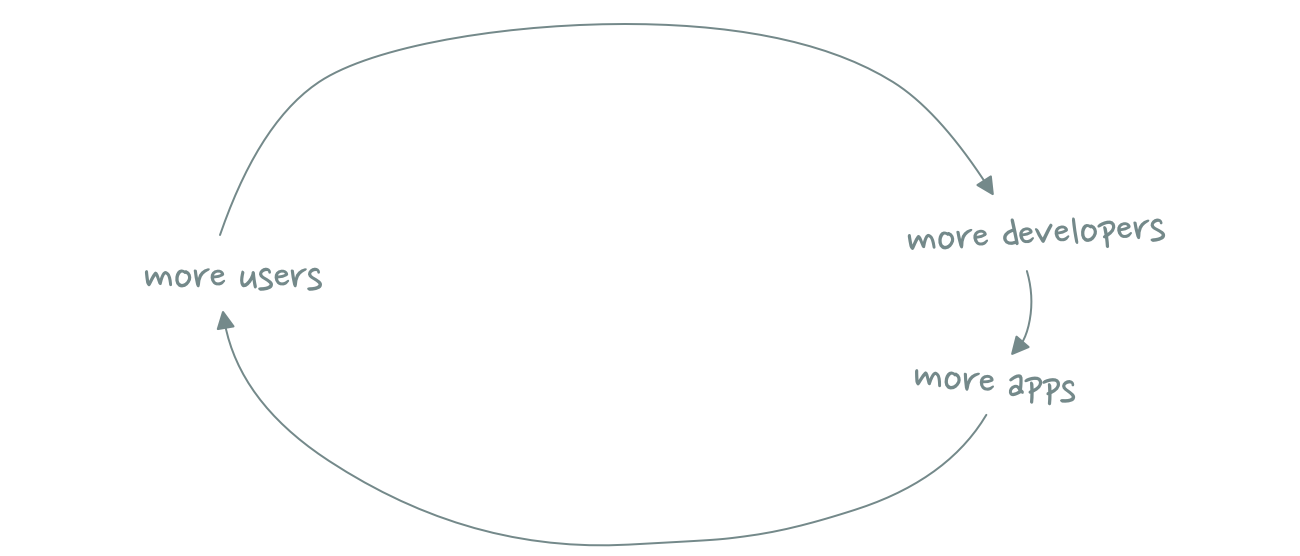

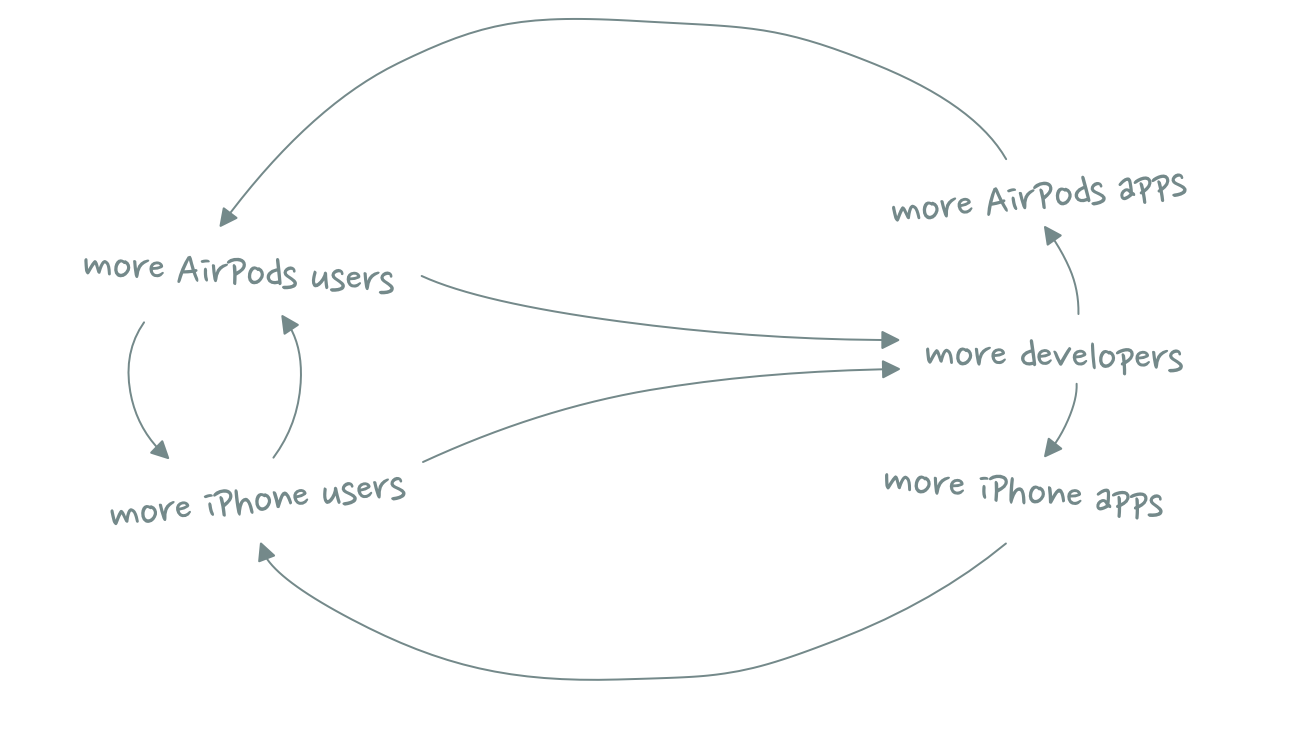

Platforms are subject to network effects: More applications attract more users to the platform, while more users in turn attract more developers who build more apps.

It’s a classic flywheel effect that creates powerful winner-takes-all dynamics. This explains why there are only two (meaningful) mobile operating systems – iOS and Android.

It also explains why everyone is so interested in upcoming platforms – and why Apple might be interested in making AirPods a platform.
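To make the flywheel a bit more tangible, here is a toy model. Everything in it is an assumption for illustration: two made-up platforms, invented starting numbers, and an `exponent` parameter that controls how strongly newcomers favor the bigger side. It is a sketch of the dynamic, not a forecast.

```python
def attraction(share: float, exponent: float) -> float:
    """Fraction of newcomers platform A wins, given its share of the other side."""
    a = share ** exponent
    b = (1.0 - share) ** exponent
    return a / (a + b)


def simulate(rounds: int, exponent: float) -> list[float]:
    """Return platform A's share of all users after each round."""
    users = [55.0, 45.0]  # assumed starting users for platforms A and B
    apps = [11.0, 9.0]    # assumed starting apps for platforms A and B
    history = []
    for _ in range(rounds):
        app_share = apps[0] / sum(apps)
        user_share = users[0] / sum(users)
        # 100 new users per round go where the apps are.
        win_users = attraction(app_share, exponent)
        # 10 new apps per round go where the users are.
        win_apps = attraction(user_share, exponent)
        users[0] += 100 * win_users
        users[1] += 100 * (1.0 - win_users)
        apps[0] += 10 * win_apps
        apps[1] += 10 * (1.0 - win_apps)
        history.append(users[0] / sum(users))
    return history


if __name__ == "__main__":
    print("with reinforcement:", [round(s, 3) for s in simulate(20, 2.0)[::5]])
    print("without:           ", [round(s, 3) for s in simulate(20, 1.0)[::5]])
```

With `exponent` set to 1, newcomers split in proportion to the current shares and the small initial lead simply persists. With any value above 1, the lead feeds on itself round after round, which is the winner-takes-all pattern described above.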

03 Why AirPods aren’t a platform

In their current form, AirPods are not a platform. They don’t provide any unique input or output functionalities that developers could leverage. Active Noise Cancellation and Transparency Mode are neat but not new or AirPods-exclusive features – other headphones offer the same. In either case, developers don’t have any control over them and thus can’t build applications that use these functionalities.

Some say that AirPods will give rise to more audio apps because they are “always in”, which in turn will lead to more (and perhaps new forms of) audio content. That might be true – content providers are always looking for alternative routes to get consumers’ attention – but, again, it does not make AirPods a platform. These audio apps work just as well with any other pair of headphones.

If Apple wants to make AirPods a platform, it needs to open up some part of the AirPods experience to developers so that they can build new things on top of it.

04 On Siri & Voice Platforms

The most obvious choice here is Siri, which is already integrated into every pair of AirPods.

In contrast to other voice assistants like Alexa and Google Assistant, Apple has never really opened up Siri for 3rd-party developers. If they did, it would create a new platform that could have its own ecosystem of apps and developers.

But I’m not convinced that this is Apple’s best option.

Let me explain why.

Opening up Siri wouldn’t make AirPods a platform; it would make Siri a platform. This might sound like a technicality, but I think it’s an important difference. As Jan König brilliantly summarized in this article, voice isn’t an interface for one device – it’s an interface across devices. It’s more of a meta-layer that should tie different products together to enable multi-device experiences.

This means Apple has little interest in making Siri an AirPods-exclusive. Voice-based computing works best when it’s everywhere. It’s about reach, not exclusivity. This is part of the reason why Google and Amazon excel at it.

At the moment, Siri’s capabilities are considerably behind those of Google Assistant and Alexa. Again, this isn’t overly surprising: Google’s and Amazon’s main job is finding the right answers to users’ questions. The required ML capabilities for a smart assistant are among the core competencies of these two companies.

But even Amazon and Google haven’t really figured out the platform part yet, as indicated by the lack of breakout 3rd-party voice applications. It seems like the two platforms are still looking for their product-market fit beyond being just cheap speakers that you can also control with your voice.

This is partly because the above-mentioned use case of voice as a cross-device layer isn’t something developers can build with the current set of APIs.

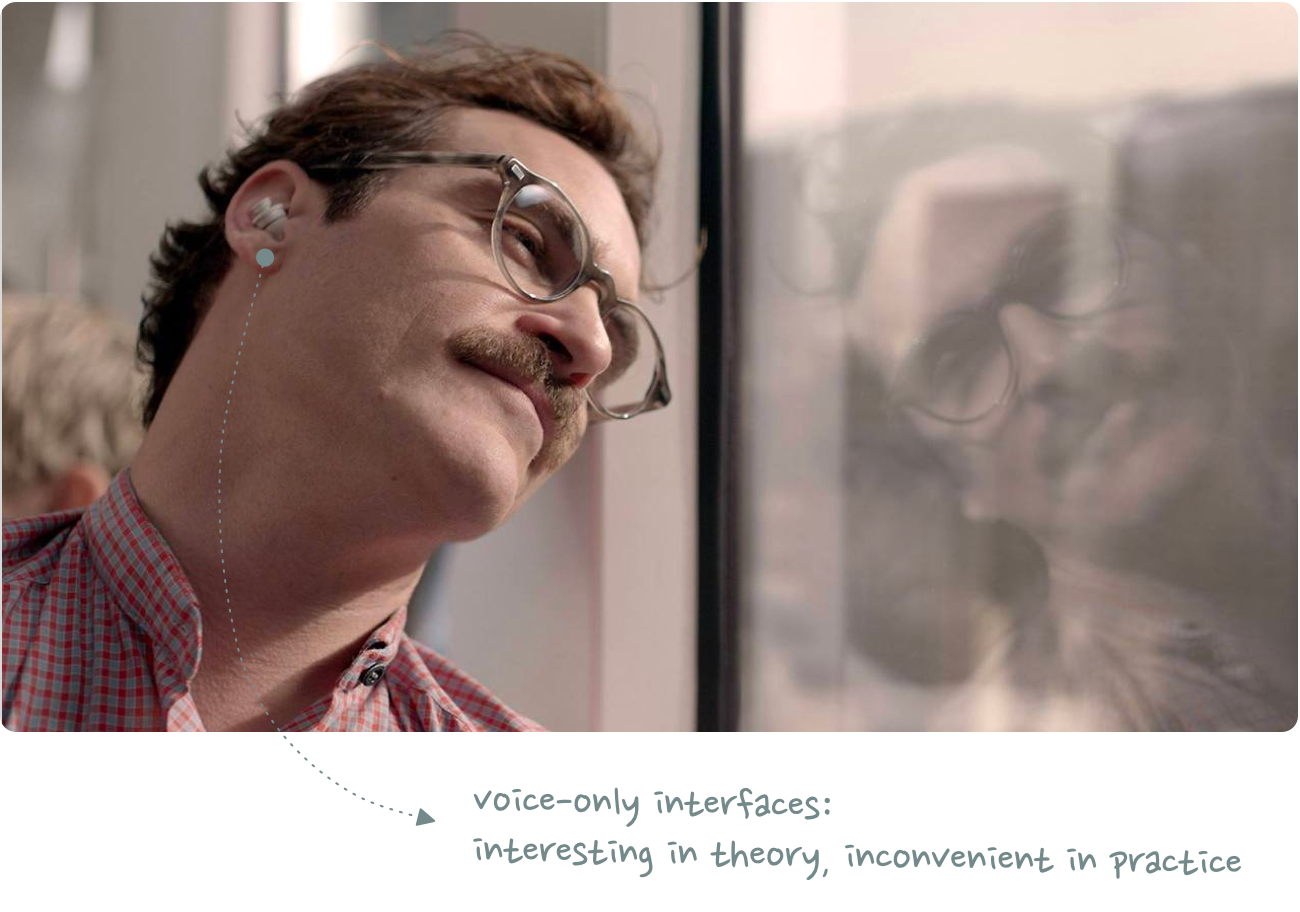

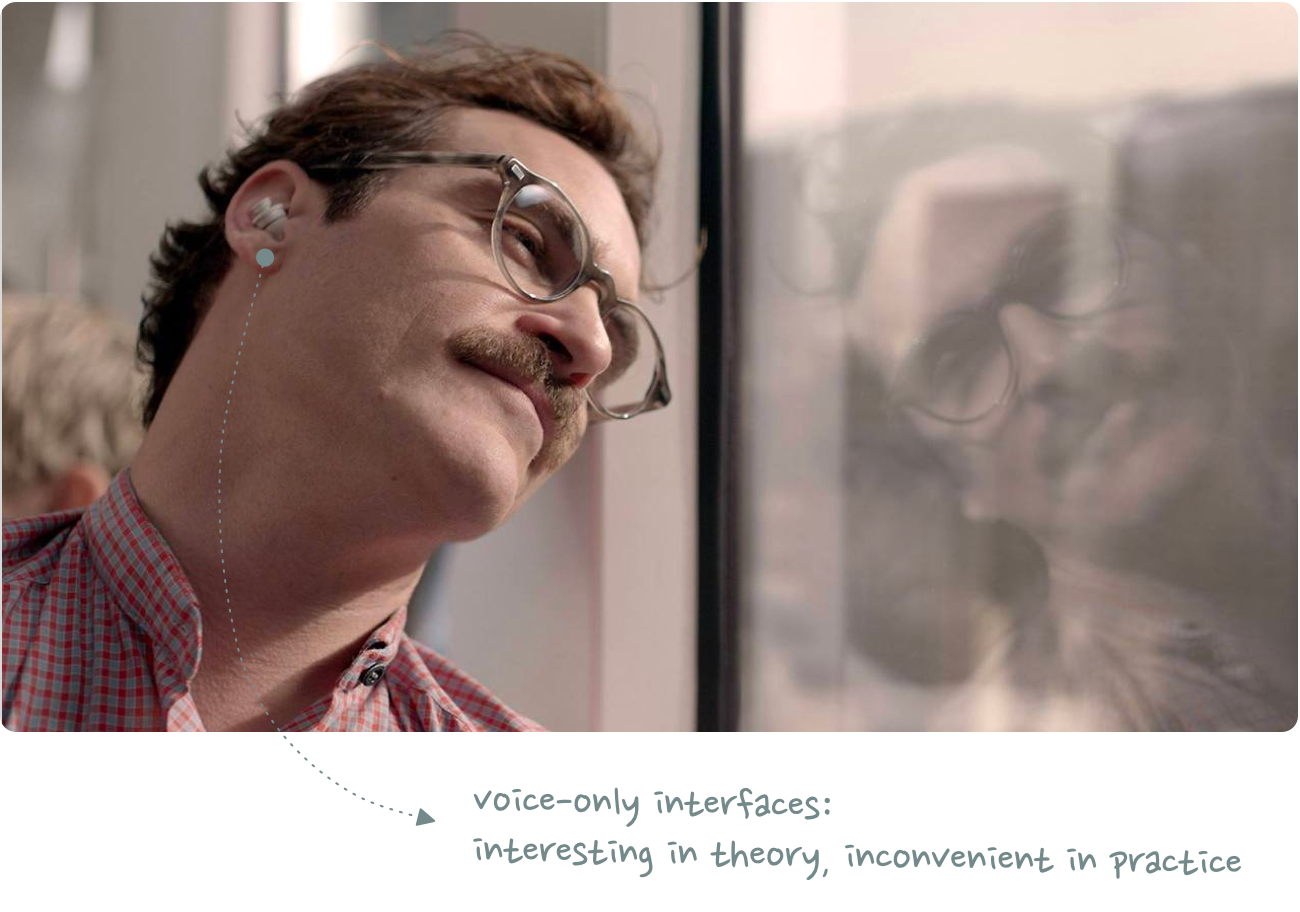

The other big reason I see is that people are mistaking voice for a replacement for other interfaces. Movies like Her paint a future where human-computer interaction primarily occurs via voice-powered smart assistants, but in reality, voice isn’t great as a primary or stand-alone interface. It works best as an *additional* input/output channel that augments whatever else you are doing.

Let me give you an example: Saying “Hey Google, turn up the volume” takes 10x longer than simply pressing the volume-up button on your phone. It only makes sense when your hands are busy doing other things (kitchen work, for example).

The most convincing voice app I have seen to date was at a hackathon where a team used the StarCraft API to build voice-enabled game commands. Not to replace your mouse and keyboard but to give you an additional input mechanism. Actual multitasking.

05 What Apple Should Build

I’m not against Apple opening Siri for developers. On the contrary, given that AirPods are meant to be worn all the time, a voice interface for situations that require multitasking is actually a very good idea. But voice input should remain the exceptional case. And it shouldn’t be what makes AirPods a platform.

Instead of voice, I’d love to see other input mechanisms that allow developers to build new ways for users to interact with the audio content they consume.

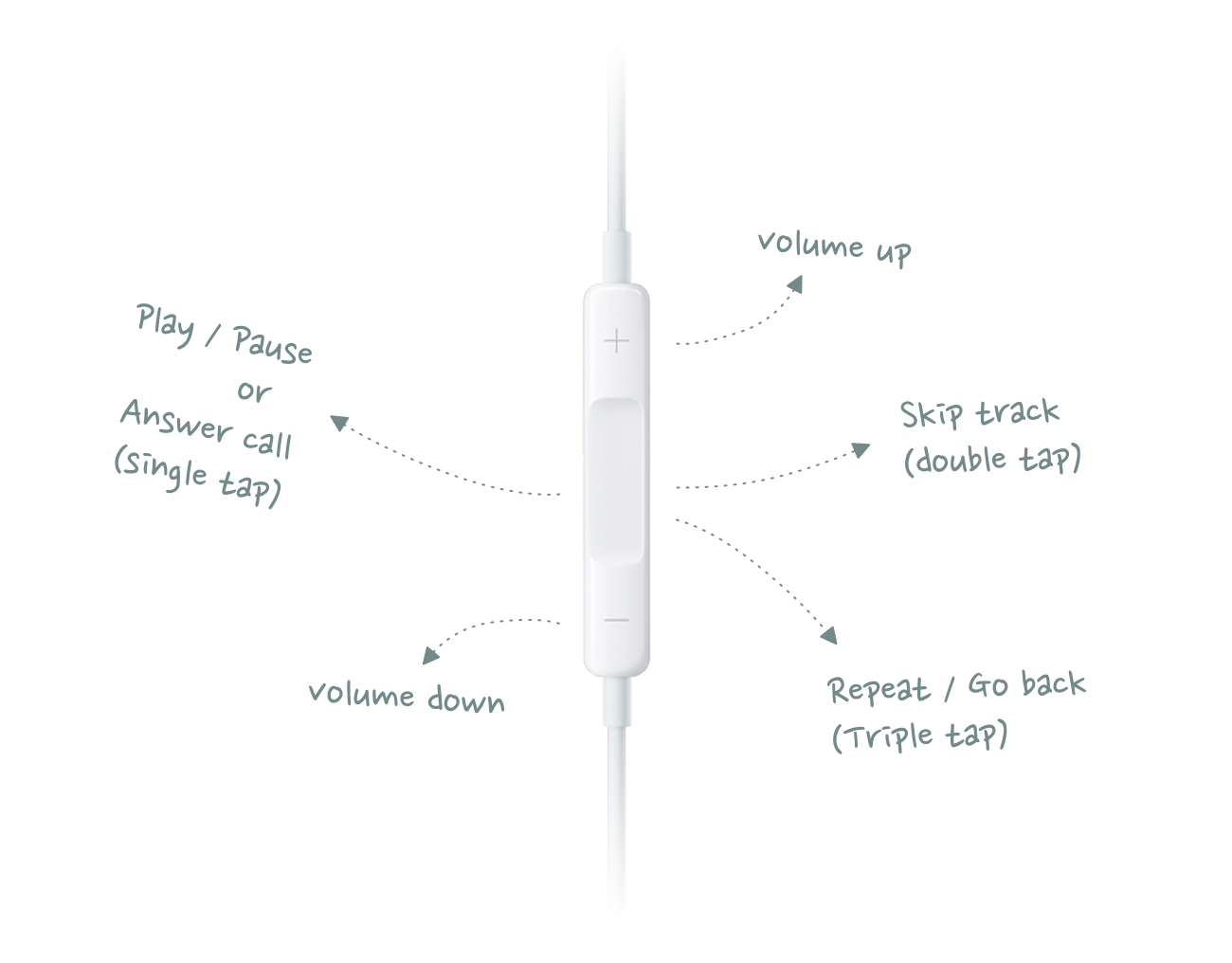

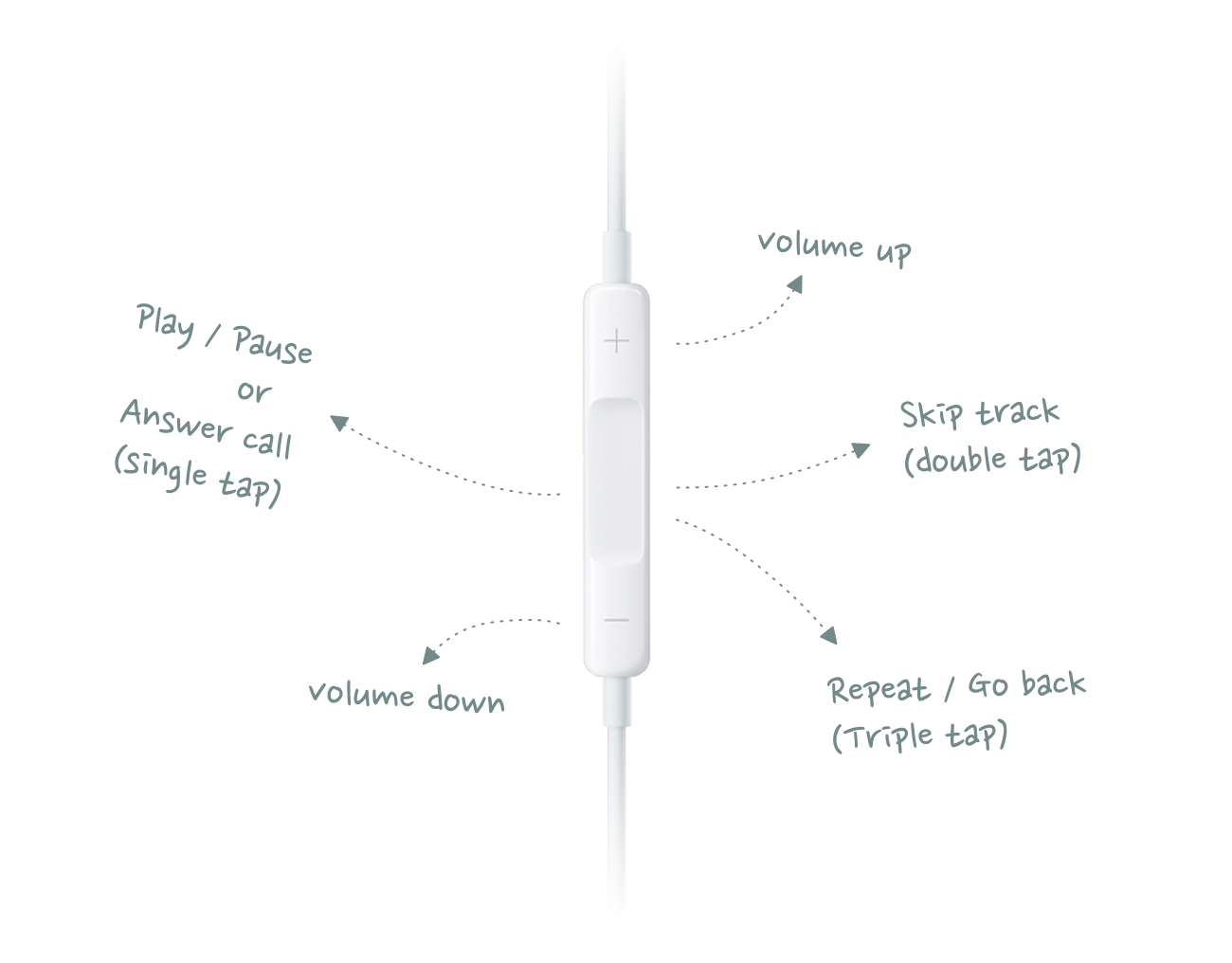

Most headsets currently on the market offer the following actions with one (or multiple) clicks of a physical button:

These inputs were invented a long time ago and there has been almost zero innovation since. Why has no one thought about additional buttons or click mechanisms that allow users to interact with the actual content?

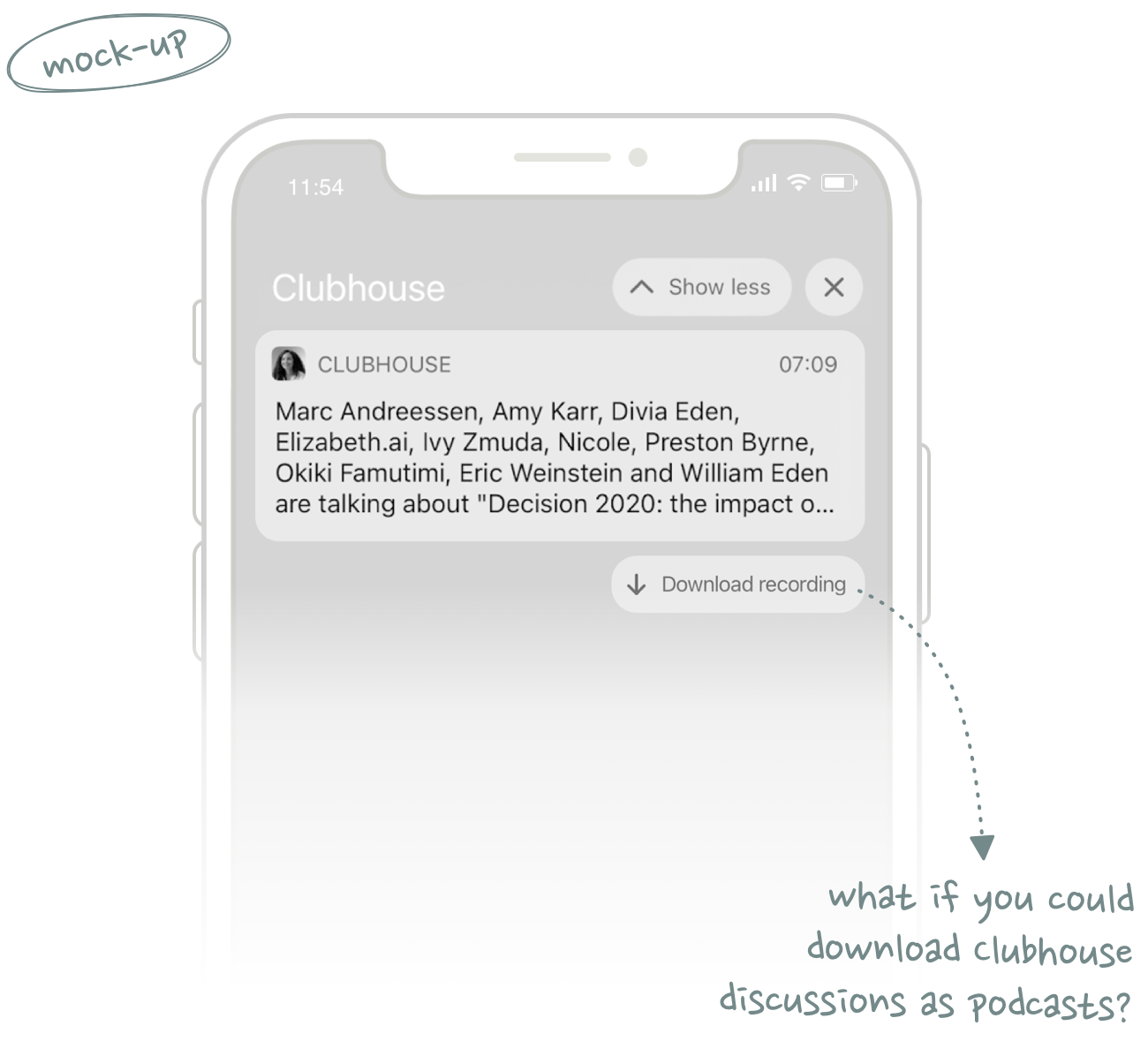

For example, when listening to podcasts I often find myself wanting to bookmark things that are being talked about. It would be amazing if I could simply tap a button on my headphones to add a timestamp to a bookmarks section of my podcast app. (Or even better, a transcript of the ~15 seconds of content before I pressed the button, which is then automatically added to my notes app via an Apple Shortcut.)

Yes, you could build the same with voice as the input mechanism, but as we discussed earlier, saying “Hey Siri, please bookmark this!” just doesn’t seem very convenient.

While podcast apps might use the additional button as a bookmarking feature, Spotify could make it a Like button to quickly add songs to your Favorites playlist. Other developers could build completely new applications: Think about interactive audiobooks or similar two-way audio experiences, for example.

This is the beauty of platforms: You just provide developers with a set of tools and they will come up with use cases you hadn’t even thought about. Crowdsourced value creation.
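Here is a rough sketch of what such a developer hook could look like. None of this is a real Apple API: `ContentButton`, `ButtonEvent` and the two toy apps are hypothetical names I made up to show how one button press could mean different things depending on which app is playing.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ButtonEvent:
    """What a hypothetical headphone 'content button' press might carry."""
    content_id: str          # episode or track that is currently playing
    position_seconds: float  # playback position when the button was pressed


Handler = Callable[[ButtonEvent], None]


class ContentButton:
    """Hypothetical platform hook: each app registers its own handler."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, app_name: str, handler: Handler) -> None:
        self._handlers[app_name] = handler

    def press(self, active_app: str, event: ButtonEvent) -> None:
        # Only the app that is currently playing audio receives the press.
        handler = self._handlers.get(active_app)
        if handler is not None:
            handler(event)


@dataclass
class PodcastApp:
    bookmarks: List[Tuple[str, float]] = field(default_factory=list)

    def on_button(self, event: ButtonEvent) -> None:
        # Podcast apps treat the press as "bookmark this moment".
        self.bookmarks.append((event.content_id, event.position_seconds))


@dataclass
class MusicApp:
    favorites: List[str] = field(default_factory=list)

    def on_button(self, event: ButtonEvent) -> None:
        # Music apps treat the same press as a Like button.
        if event.content_id not in self.favorites:
            self.favorites.append(event.content_id)


if __name__ == "__main__":
    button = ContentButton()
    podcasts, music = PodcastApp(), MusicApp()
    button.register("podcasts", podcasts.on_button)
    button.register("music", music.on_button)
    button.press("podcasts", ButtonEvent("episode-42", 754.0))
    button.press("music", ButtonEvent("track-7", 31.5))
    print(podcasts.bookmarks, music.favorites)
```

The platform only delivers the event; what it means is entirely up to the app. That separation is what would leave room for use cases nobody has thought of yet.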

06 Closing Thoughts

(1) The input mechanism I describe doesn’t have to be a physical button. In fact, gesture-based inputs might be even more convenient. AirPods already contain accelerometers; if developers could read their motion data, users could interact with audio content by nodding or shaking their heads. Radar-based sensors like Google’s Motion Sense could also create an interesting new interaction language for audio content.

(2) You could also think about the Apple Watch as the main input device. In contrast to the AirPods, Apple opened the Watch for developers from the start, but it hasn’t really seen much success as a platform. Perhaps a combination of Watch and AirPods has a better chance of creating an ecosystem with its own unique applications?

(3) One thing to keep in mind is that Apple doesn’t really have an interest in making AirPods a standalone platform. The iPhone (or rather iOS) will always be the core platform that Apple cares about. Instead of separate iPhone, Watch and AirPods ecosystems, think about Apple’s strategy as more of a multi-platform bundle. Even as a platform, AirPods will remain more of an accessory that adds stickiness to the existing iPhone ecosystem.
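As a footnote to point (1), here is roughly how nod and shake detection could be prototyped. This is a deliberately naive sketch under loud assumptions: it presumes the headphones deliver rotation-rate samples as `(pitch_rate, yaw_rate)` pairs in rad/s (raw accelerometer readings would need more processing first), and the function name and `threshold` value are invented for illustration.

```python
from typing import Iterable, Tuple


def classify_head_gesture(
    samples: Iterable[Tuple[float, float]],
    threshold: float = 1.0,  # assumed minimum RMS rotation rate, in rad/s
) -> str:
    """Return 'nod', 'shake', or 'none' for a short window of motion samples."""
    pitch_energy = 0.0
    yaw_energy = 0.0
    count = 0
    for pitch_rate, yaw_rate in samples:
        pitch_energy += pitch_rate ** 2
        yaw_energy += yaw_rate ** 2
        count += 1
    if count == 0:
        return "none"
    pitch_rms = (pitch_energy / count) ** 0.5
    yaw_rms = (yaw_energy / count) ** 0.5
    # Ignore small movements such as walking or shifting in a chair.
    if max(pitch_rms, yaw_rms) < threshold:
        return "none"
    # Up-and-down motion dominates in a nod, side-to-side in a shake.
    return "nod" if pitch_rms > yaw_rms else "shake"


if __name__ == "__main__":
    nod_window = [(2.0, 0.1), (-2.0, 0.0), (1.5, -0.1)]
    print(classify_head_gesture(nod_window))
```

A real detector would have to cope with walking, running and turning to look at something, so treat this as a starting point for a hackathon rather than something shippable.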

Do you have thoughts or feedback on this post?

If so, I’d love to hear it!

Thanks to Jan König for reading drafts of this post.